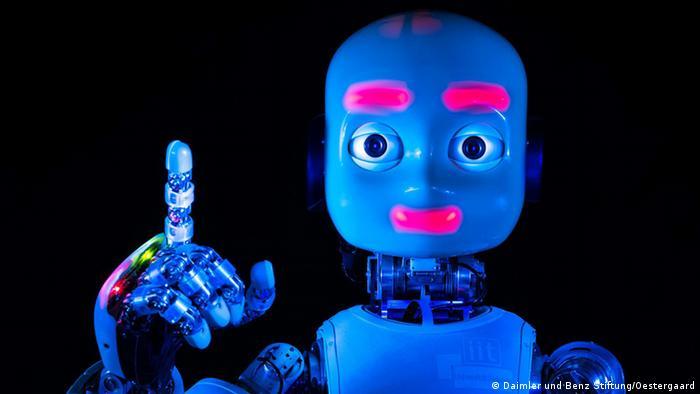

Artificial intelligence: is it closing in on human intelligence?

Matching human intelligence is a goal of artificial intelligence.

Alan Turing

John Thornhill in London

It can churn out emails, computer code, internet ads, plotlines for video games, riffs for songs and suggestions for Halloween costumes. It can also write disarmingly persuasive poems in the spirit of Emily Dickinson, prose in the style of Ernest Hemingway and even, if asked, an imaginary conversation between Dickinson and Hemingway.

The possibilities seem almost endless. So when I had the opportunity to interact with GPT-3, a new language-generation model that has caused quite a stir in the AI world over the past few months, I enlisted its help in drafting a new biography of myself, infused with the spirit of Luke Skywalker.

Having been trained on almost all the text accessible on the Internet, GPT-3 is remarkably fast, large and versatile. Here is what it instantly produced: "John Thornhill was already a decorated war hero when he joined the Rebel Alliance. He was expert with a weapon that fires bursts of energy, and intuitive when it came to the art of war, but his true skill lay in his squadron. After rising to command his own squadron in the Rebellion, Jon led his team through countless battles, making him a steadfast leader with a true Rebel spirit." Time to update my business cards.

Thanks to recent advances in machine learning, language-generation systems are becoming increasingly popular. Narrative Science's Quill platform, for example, can ingest data on the performance of a stock portfolio and write summaries for investors. But in terms of sheer adaptability, none of them can match GPT-3, which was unveiled in May by OpenAI, a San Francisco-based research company. Trained at an estimated cost of $12 million, the model contains 175 billion language parameters, more than 100 times as many as its predecessor. It is, to adapt a phrase from the pioneering British computer scientist Alan Turing, the most impressive "imitation" machine built to date.

Turing was one of the first people to imagine how the world could be transformed by machines that think. In his 1950 paper "Computing Machinery and Intelligence", he argued that computers might one day become so good at impersonating humans that it would be impossible to distinguish them from flesh-and-blood beings. “We may hope that machines will eventually compete with men in all purely intellectual fields,” he wrote.

Such universal computing machines would be able to win what he called the "imitation game" by persuading a person in an electronic dialogue that they were interacting with another human, although some now argue that the so-called Turing test may reveal more about human gullibility than about true machine intelligence.

Seventy years after Turing's paper, thanks to the rapid expansion of the Internet and exponential increases in computing power, we have moved into a machine-enabled world that would stretch even Turing's imagination. Using new software techniques, such as neural networks and deep learning, computer scientists have become far better at training machines to play the imitation game.

Some who have tried GPT-3 say it shows glimmers of real intelligence, marking a significant step toward the ultimate endpoint of AI: artificial general intelligence (AGI), the point at which machine intelligence matches the human kind across almost every intellectual domain. Others dismiss this as nonsense, pointing to GPT-3's ludicrous flaws and arguing that we are still several conceptual breakthroughs away from creating any such superintelligence.

According to Sam Altman, the 35-year-old chief executive of OpenAI and one of the most prominent figures in Silicon Valley, there is a reason smart people are so excited about GPT-3. “There is evidence here of a first foray into general-purpose AI: a single system that can support a very large number of different applications and really scale up the kinds of software we can build,” he told the Financial Times in an interview. “I think its significance is as a glimpse of the future.”

OpenAI ranks as one of the most exotic organizations on the planet, perhaps comparable only to Google's DeepMind, the London-based AI research company run by Demis Hassabis. Its 120 employees are divided, in Altman’s words, into three very different “tribes”: AI researchers, start-up builders, and technology policy and safety experts. It shares offices in San Francisco with Neuralink, the futuristic brain-computer interface company.

Founded in 2015 with a $1 billion funding commitment from a number of leading West Coast entrepreneurs and tech companies, OpenAI prides itself on its wildly ambitious mission of developing artificial general intelligence for the benefit of all humanity. Its early backers included Elon Musk, the mercurial billionaire founder of Tesla and SpaceX (who has since stepped back from OpenAI), Reid Hoffman, the venture capitalist and founder of LinkedIn, and Peter Thiel, the early investor in Facebook and Palantir.

Initially founded as a not-for-profit company, OpenAI has since adopted a more commercial approach and last year accepted a further $1 billion investment from Microsoft. Structured as a "capped-profit" company, it is able to raise capital and issue equity, which is essential if you want to attract the best researchers in Silicon Valley, while staying true to its overarching mission without undue pressure from shareholders. “This structure enables us to determine when and how the technology will be released,” Altman says.

Altman took over as chief executive last year, having previously run Y Combinator, one of Silicon Valley's most successful start-up incubators, which has helped spawn more than 2,000 companies, including Airbnb, Dropbox and Stripe. He says he was tempted to give up this "dream job" only to help tackle one of humanity's most pressing challenges: how to develop safe and beneficial artificial intelligence. "It's the most important thing I can ever imagine," he says. "I won't pretend I have all the answers yet, but I'm happy to put all my energy into trying to contribute in any way I can."

In Altman's view, the AI revolution now unfolding may matter more to humanity than the agricultural, industrial and computer revolutions combined. The development of artificial general intelligence would fundamentally reset the relationship between humans and machines, possibly leading to the emergence of a higher form of electronic intelligence. At that point, in the words of the Israeli historian Yuval Noah Harari, Homo sapiens will cease to be the smartest algorithm on the planet.

Altman believes that AI can transform human productivity and creativity, enabling us to tackle many of the world's most complex challenges, such as climate change and pandemics. "I think it's going to be an incredibly powerful future," he says. But if mishandled, AI may only multiply many of the problems we face today: the excessive concentration of corporate power as private companies increasingly take over functions once performed by nation states, widening economic inequality and narrowing opportunity, and the spread of misinformation and the erosion of democracy.

Some writers, such as Nick Bostrom, have gone so far as to argue that uncontrolled AI could pose an existential threat to humanity. “Before the prospect of an intelligence explosion, we humans are like small children playing with a bomb,” he wrote in his 2014 book Superintelligence. Such warnings certainly caught the attention of Elon Musk, who tweeted: "We need to be super careful with AI. Potentially more dangerous than nukes."

Concerns about how best to manage these powerful tools mean that OpenAI has released GPT-3 only in a controlled environment. “GPT-3 wasn't a model we wanted to put out into the world without being able to change how we applied things as we went along,” Altman says. About 2,000 companies have now been given access to it in a private, controlled beta test. What they learn as they explore GPT-3's capabilities is being fed back into the model to improve it. "Mind-blowing", "shockingly good" and "fantastic" are just some of the reactions from the developer community.

David Chalmers, a professor at New York University and an expert in the philosophy of mind, has gone so far as to suggest that GPT-3 is sophisticated enough to show rudimentary signs of consciousness. "I am open to the idea that a worm with 302 neurons is conscious, so I am open to the idea that GPT-3 with 175 billion parameters is conscious too," he wrote on the philosophy blog Daily Nous.

However, it did not take users long to expose the darker side of GPT-3 and its tendency to produce racist and sexist language. Some fear it will only unleash a tidal wave of "semantic garbage".

If OpenAI detects any evidence of intentional or unintentional abuse, such as the generation of spam or toxic content, it can cut off the offending user and update the model's behavior to reduce the chances of it happening again. “We can certainly cut a user off if they violate the terms and conditions, and we will, but what is most exciting is that we can change things very quickly,” Altman says.

He adds: “One of the reasons we launched this as an API is so that we can practice deployment: where it works well and where it doesn't, which kinds of applications work and which don't. This is really a practice run for us in deploying these powerful, general-purpose AI systems.”

These lessons should help improve the design and safety of future AI systems as they are deployed in chatbots, robotic caregivers or self-driving cars, for example.

Impressive as its current performance is in many respects, the true significance of GPT-3 may well lie in the capabilities it develops for the generation of models that come after it. At present it works like a super-sophisticated autocomplete function, capable of stringing together plausible-sounding sequences of words without any concept of understanding. As Turing foresaw decades ago, computers can achieve competence in many fields without ever acquiring comprehension.

John Etchemendy, co-director of the Stanford Institute for Human-Centered Artificial Intelligence, highlights the current limitations of even the most powerful language-generation models. GPT-3 may have been trained to produce text, he says, but it has no intuitive grasp of what that text means. Instead, its output is derived from modeling mathematical probabilities. But he notes that recent advances in speech and computer vision systems could greatly enrich its capabilities over time.

"It would be great if we could train something on multimodal data, both text and images," he says. "The resulting system could then know not only how to produce sentences using the word 'red' but also how to use the color red. We could start to build a system that has true language understanding rather than one based on statistical ability."

What is GPT-3?

GPT-3, which stands for Generative Pre-trained Transformer 3, is a very powerful machine learning system that can rapidly generate text with minimal human input. After an initial prompt, it can recognize patterns of words and use them to predict what comes next.

What makes GPT-3 so incredibly powerful is that it has been trained on about 45 terabytes of text data. For comparison, the full English-language version of Wikipedia represents only 0.6 percent of the complete data set for the GPT-3 model. Or, to put it another way, GPT-3 processes about 45 billion times the number of words a person sees in their lifetime.

But although GPT-3 can predict with frightening accuracy whether the next word in a sentence should be "umbrella" or "elephant", it has no grasp of meaning. One researcher asked GPT-3: "How many eyes does my foot have?" GPT-3 replied: "Your foot has two eyes."
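The idea of predicting the next word from patterns in text can be illustrated, in a drastically simplified form, with a toy model. This is only a sketch under loudly stated assumptions, not how GPT-3 works internally: GPT-3 is a transformer neural network with 175 billion parameters, whereas this hypothetical example merely counts which word follows which (a so-called bigram model) in a three-sentence corpus invented for illustration. The function names `train_bigrams`, `next_word_probs` and `autocomplete` are likewise made up for this sketch.

```python
from collections import Counter, defaultdict


def train_bigrams(text: str) -> dict[str, Counter]:
    """Count, for every word in the corpus, which words follow it and how often."""
    words = text.lower().split()
    follows: dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def next_word_probs(follows: dict[str, Counter], word: str) -> dict[str, float]:
    """Turn raw follow-counts into probabilities for the word after `word`."""
    counts = follows.get(word.lower(), Counter())
    total = sum(counts.values())
    return {w: c / total for w, c in counts.items()} if total else {}


def autocomplete(follows: dict[str, Counter], prompt: str, n_words: int) -> str:
    """Greedily extend the prompt with the single most probable next word."""
    words = prompt.lower().split()
    if not words:  # nothing to continue from
        return ""
    for _ in range(n_words):
        probs = next_word_probs(follows, words[-1])
        if not probs:  # the model has never seen anything follow this word
            break
        # max() returns the first word seen among equally likely candidates
        words.append(max(probs, key=probs.get))
    return " ".join(words)


# A tiny, invented training corpus (hypothetical; GPT-3 saw vastly more text).
corpus = (
    "take an umbrella when it rains . "
    "take an umbrella to work . "
    "the elephant is large ."
)
model = train_bigrams(corpus)

print(next_word_probs(model, "an"))
print(autocomplete(model, "take", 2))
```

Given the word "an", this toy model is certain the next word is "umbrella", and it will happily extend "take" into "take an umbrella", yet nothing in it represents what an umbrella is or why anyone would need one. Scaled up enormously, that is the gap between statistical fluency and understanding that GPT-3's critics describe.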

Nabla Technologies, a healthcare data company that examined how well GPT-3 could give medical advice, highlighted the potential for harm arising from the current mismatch between ability and understanding. It found that in one case GPT-3 supported a simulated patient's wish to die by suicide. (OpenAI explicitly warns of the risks of using GPT-3 in such "high-risk" categories.)

Cases like this highlight the need for continuous human supervision of these automated systems, says Shannon Vallor, professor of the ethics of data and artificial intelligence at the University of Edinburgh. “Right now, GPT-3 needs a human babysitter at all times to tell it what kinds of things it shouldn't say. The problem is that GPT-3 isn't really intelligent. It doesn't learn the way humans do. There is no mode in which GPT-3 becomes aware of the inappropriateness of these particular utterances and stops using them. That is an obvious and widening gap that I don't know how we are going to close.”

She adds: “The promise of the Internet was its ability to bring knowledge to the human family in a much more equitable and accessible way. I fear that because of some technologies, such as GPT-3, we are on the cusp of seeing a real regression, as the information commons becomes increasingly unusable and even harmful for people to access.”

Reid Hoffman, founder of LinkedIn and a member of OpenAI's board of directors, says the organization devotes a lot of effort to designing safe operating procedures and better governance models. To guard against bad outcomes, he suggests three things: scrubbing historical data that embeds societal biases, building some form of explainability into AI systems so that we understand what needs correcting, and constantly checking a system's output against its original goals. "There are the beginnings of a lot of good work on these things. People are alert to the problems and are working on them."

He adds: "The question is not how do you stop technology, but how do you shape technology. A missile is not inherently bad. But a missile in the hands of someone who wants to cause damage and has a bomb can be very bad. How do we deal with this properly? What do the new treaties look like? What does the new monitoring look like? What kinds of technology do we build or not build? All of these are very present, very active questions right now."

Asking such questions undoubtedly shows good intent. However, answering them satisfactorily will require unprecedented feats of imagination, collaboration and effective implementation among shifting coalitions of academic researchers, private companies, national governments, civil society and international agencies. As always, the danger is that technological advances will outpace human wisdom.